Malhar: Towards a fast, generic and minimal Fuzzy Search Index.

The most dangerous thing is the demise of our dreams. (Pash)

Information retrieval is one of the most used features of any computation system and underpins academic research, journalism, product discovery to name a few. Almost every project begins from a preliminary search about a topic or concept through some search interface. Given ubiquotous requirement of search interfaces for any information retrieval it still remains (ironically) difficult to encounter a great search interface. Use of word "Great" in the last sentence may seem subjective as preferences and intended usages could differ for different domains, but returning of some possible good matches given some query must be the norm for any search interface. For most of the consumers/users search interface would be a single entry to a new world, there remain other incentives at play to control an user's experience by implementing some biased search or applying dark-patterns to push some unwanted content!

Through this post i want to discuss a fuzzy search index which i have been developing for past few months from scratch with the idea of making it simpler to search through a random dump of content from any domain with good speed.

Main motivation is to develop a minimal(self contained library), robust(indexing directly at bytes level), scalable(run whole infrastructure even on a smartphone), flexible(ability to experiment) index, hence pushing the default bar set for search experience both for the developer and user.

No developer would want to include a lot more infrastructure just to make it easy to search through 10s or 100s of command line options, as developer has already done its job by providing the code/software in the first place. So another major motivation was to make such index "auxiliary", without any need to alter the existing infrastructure in any form.

How:

For some background, it is based on the Local sensitive hashing concept, which involves generating fixed size hashes given a sequence of bytes. Unlike cryptographic Hash, hashes are generated to preserve locality information encountered in the original data. Any such two hashes could then be compared to get an idea of how similiar (literally) the original content would have been. Although the fundamental idea remains constant, final implementation would involve a series of choices like "hash function used", "number of buckets" etc made for the domain any such hash is supposed to be used for.

If we were to think about using a fixed size sequence to represent a word or string, where could we start. English contains 26 characters, as the fundamental units to be able to represent any possible word and sentences by extension.

We could start with a 26 bit sequence, where zeroth bit represents presence or absence of a, 1st bit represents presence or absence of b and so on.

For word "abcd" our hash would look like 11110000000000000000000000, for word "aabbccdd", it would also be the same even though both spellings are quite different.

Then we may think of some way to encode the number of times a character has appeared in a given word. Actual number of times a character could appear in a word is impossible to predict, but we would make a choice (or add some bias). We can then argue (based on our not-so-mighty intution) that it is rare for a character to appear more than, say 4 times in any word.

This provides us with two levels of occurence frequency, either a character appears more than 4 times or less. 3 possible states now could be associated with any character and to unambiguously differentiate between them we would need atleast 2 bits.

While we are at it why not use extra bit to differentiate a character state further. for example 00 if character is not present in a word, 01 character appears but less than 3 times, 10 character appears more than 3 times but less than 7, 11 would mean character appears more than 7 times.

This may seem silly in current era of "deeper networks", but such hand-crafted features still work if you know how to use them !

Our hash now extends to 26 * 2 = 52 bits for each word/string after we encoded (or tried to) the frequency information in the hash.

There is still no provision to incorporate the "position" information in our hash, and in current state we would end up with similar hashes for each of these words "abcd", "bacd", "dcba" and remaining "21" combinations.

This would lead to n-gram indexing, which most of search infrastructures include to be able to implement some form of fuzzy searching.

if n = 1, this would mean retrieving all the "documents" with a particular character. This wouldn't make sense but it is easier to implement as number of possible unigrams are limited.

Using n = 2, would lead to around 26 x 26 combinations and much more useful than unigram indexing, and so on.. .

As far as i know, n = 3 is the magic number most non-trivial search infrastructures go for by generating a special trigram inverted index like structure. given some query all possible "3-grams" could be retrieved and search against the stored Index to retrieve possible "documents".

We would need a way to encode this n-gram information in hash. Say we use n=3, then also assuming our word can be "ascii" encoded, we would have uint8 value for each character in the word.

Given "hello", we should be able to encode "hel", "ell", "llo" into an array of 3 uint8 characters. We can then also have an array of 3 random integers/uint8 values and multiply these 2 arrays elementwise.

(forgetting overflow ) it would look like this:

# Assuming N = 3 , aka tri-gram.

# filter

FILTER = [17, 89, 245] # some random weights.

# given "hello" -> ["hel", "ell", "llo"]

hel = [11, 12, 15]

ell = [11, 15, 12]

llo = [17, 18, 19]

x1 = hel * FILTER # elementwise multiplication

x2 = ell * FILTER

x3 = llo * FILTER

c1 = sum(x1) % 255 # [a value b/w 0 - 255]

c2 = sum(x2) % 255

c3 = sum(x3) % 255

local_features = [c1, c2, c3]

# based on the values of c1, c2, c3 we may assign them an id(bucket) from predefined IDs to ensure a FIXED SIZE HASH.

Main motivation is to differentiate b/w n-grams, by reducing n-grams into a single number as we go along in the sliding fashion across a string/word. Some other parameters like stride length (overlapping window) are need to be decided.

This may remind you of convolution operation from deep-learning architecture, or signal-processing !

Such processing collects the local features and we already have global information from earlier, intermixing and fusing information would form the crux of the final comparison process.

Note that during final implementation, we end up with parameters like value of "N", which we could optimize further depending upon application.

I have explained other practical details about fuzzy-engine in this earlier post.

Once we have the ability to generate hashes for a given word/string we could use this ability to generate an "inverted-index" like structure for any document/paragraph. We tokenize/split the paragraph into individual words which are then "hashed" and mapped to a primary key/document id. User would be responsible for rendering the original document's content based on the returned key/id on querying the Fuzzy Index for some query.

# pseudo code

def tokenize(data:str) -> Iterable[str]:

pass

def index_data(primary_key:int, data:str):

for d in tokenize(data):

fuzzy_hash = generate_hash(d)

inverted_index[fuzzy_hash] = {...., primary_key} # add this key to a set.

def query(query:str) -> Iterable[Tuple[int, int]]:

relevant_keys, scores = inverted_index.get_stored_keys(query)

return (relevant_keys, scores)

# user can use returned relevant keys to actually access the corresponding document contents...

Library would not need to know any details about how the original text content is being stored, as long as user can generate an unique key for each of those documents. Actual text content may just be a list in memory, or may reside in some database or in the cloud storage like S3. Index becomes complementary and doesn't need to store original content or its copy in any form after initial indexing. This also allows index to be queried independently with very minimal infrastructure.

Hash-generation code is supposed to model the availability of a keyword encountered for a given document independently of the frequency or importance for that keyword. (i.e every extracted keyword is given equal weightage during indexing).

Further filtering should be done case by case basis by an user and is not of library's concern.

Sample Usage:

# an array of some text content. paragraphs = [p1, p2, ...] # populate db = FuzzyIndex( name = "wikisearch" ) for i, p in enumerate(paragraphs): db.update(key = i, data = p) print(db)

#query query = "taj mahal" top_k = 10 key_score = db.query(query = query, threshold = 150) for key, score in key_scores[:top_k]: print("{} : {}".format(paragraphs[key], score))

Implementation Details:

Full library is written in python along with some parts in Nim to speed up querying process, with no third party dependency. This makes it flexible enough to be embedded with an application or any script to provide a fuzzy search interface automatically !

But to make this work with non-trivial amount of resources, code must be fast enough be useful or have some means to scale up if need arises.

I delegated most of the bottleneck code to Nim to run it fast enough to be used in super real-time with around 150K hashes on a single core of my intel i5.

Using fixed-size hashes allows comparison code to use Cache more efficiently, atleast for Nim/C backend at the cost of extra work to expose it from and to python side.

Multi-threading still needs to be implemented which should speed up querying further, i will get around to it in some time.

Each generated hash is composed of 36 bytes and each hash comparison takes about 5 microseconds on my system, but we may filter some of the hashes based on the "query" first character.

For any indexed content, "comparison" of hashes, makes up the bulk of the runtime and has a log-like relationship with number of hashes stored for that content. Number of hashes would be result of "unique" words count collected for "vocabulary" generated during "indexing", and this "vocabulary" size tends to plateau even though number of documents/resource could be much larger. For a diverse english content like wikipedia, a vocabulary size of 500k would be a generous guess.

For now i am not using any "stemmer", that would reduce the vocabulary size by few percent, and more importantly would help us reduce the number of redundant matches like "point" vs "points".

One limitation is that first character is supposed to be correct when searching for a particular word in the indexed content. This could be solved by running a full brute search over all the hashes within a distance of already generated best hash.

But good tokenization helps in reducing the severity of this limitation!

Another limitation (or strength) is that hash generation works directly on bytes. I use utf8 encoding which is a variable type of encoding but is more memory-efficient compared to something like UTF32.

For example, word Ögedei's first character would need 2 bytes when encoded with "utf8", and this shifts each successive character(byte) 1 to the right in final encoding.

When compared against word Ogedei, this produces a low comparison score. (recall that N-gram score is generated by elementwise multiplication, extra 1 byte shift could sometimes drastically change the bucket ids being assigned! ).

Final score is a weighted fusion of global and local-features, and some bias gets also encoded due to "template string" chosen in pursuit of generating an useful hash from just few bytes .

Still, generated score would be within the expected threshold for most of such cases and hence would contain keys/ids pointing to document containing words like ogedei.

One solution is to find and use nearest "ascii" character for each unicode before indexing, or instead use a fixed size encoding like UTF32 .

As with all "hashing" techniques, there remains the possibility of a "collision" due to information lost during the hashing process. Since "comparison" happens in "HASHING" dimension, if two quite different spellings/words produce same hash we would have no way to distinguish among them. Given a fixed hash resolution (256 bits in our case), probability of false-positives would be directly proportional to the number of "hashes" stored. In our case we would instead have a "VOCABULARY" to hash, so this puts an upper bound to a false-positive rate for a given dataset. For smaller "vocabularies", we are supposed to have much lower false positive rates!

Note that this kind of fuzzy search is based on the stats collected/indexed during indexing phase and then comparing them as we type or query , sometimes current query would be substring of the actual string we desired. For example in case of a search for a movie like "handmaiden" in it, we may just want to search "hand" and hope to find all strings/content with "hand" as a substring. But the "stats" based context/hash would have information about "handmaiden" as a full word. This stark difference in the length of "query" and "stored" content is a major issue, but thing to note here is that even though the "query" is much smaller than content(string) we actually we wish to retrieve, "query" could be assumed to be a valid vocabulary word for most of the cases. This leads to the idea of using some GLOBAL stats table to figure out obvious substrings, before we hash the content. We could further divide the "content" into subwords if possible. For our example, we can hope "handmaiden" would be divided into "hand", "maiden". And what better way to extract universal context about a language than a NLP model trained on a very big dataset like common crawl or wikipedia.

Tokenization:

We would not incorporate a full NLP model, even though idea is nice, but every bit of code would add latency, introduce dependency and may not be practical for a production deployment, beating our main purpose!

We would just use a "tokenizer" to actually tokenize/divide the words further into subwords. In case you haven't come across this concept, it is a way to parse/consume a large amount of text to come up with prevelant patterns/subwords in a language. It starts with single characters/bytes and keep merging highest frequency pairs until it reach the desired vocabulary size or when all possible patterns are exhausted. Given enough vocabulary size and content, it would end with a nice vocabulary set, with popular unigrams, bigrams, trigrams, whole words and stuff conditioned on that content. This really makes it an ideal frontend to elevate search experience for any domain like a programming language or a spoken language.

It could be run on any raw-text/bytes irrespective of language, hence equally useful for any language with enough published content on the internet. Qualitatively, it would help the NLP model to handle Out Of Vocabulary words like "fatherhood", even though this exact word was never seen during training, tokenizer would hopefully tokenize it into "father" and "hood". Hence reducing the number of such "OOV" words drastically and still maintaining the semantic understanding.

For our use case, i just borrowed such a "vocabulary" generated by a tokenizer used in the "BERT" model from google. It has about 32k subwords, which we use to try to divide the content before hashing.

Note that all the ideas discussed here are applicable to all languages and not just english, as search is assumed to be a fundamental requirement regardless of content language. Further tweaks could easily be added to improve upon the baseline for a specific language !

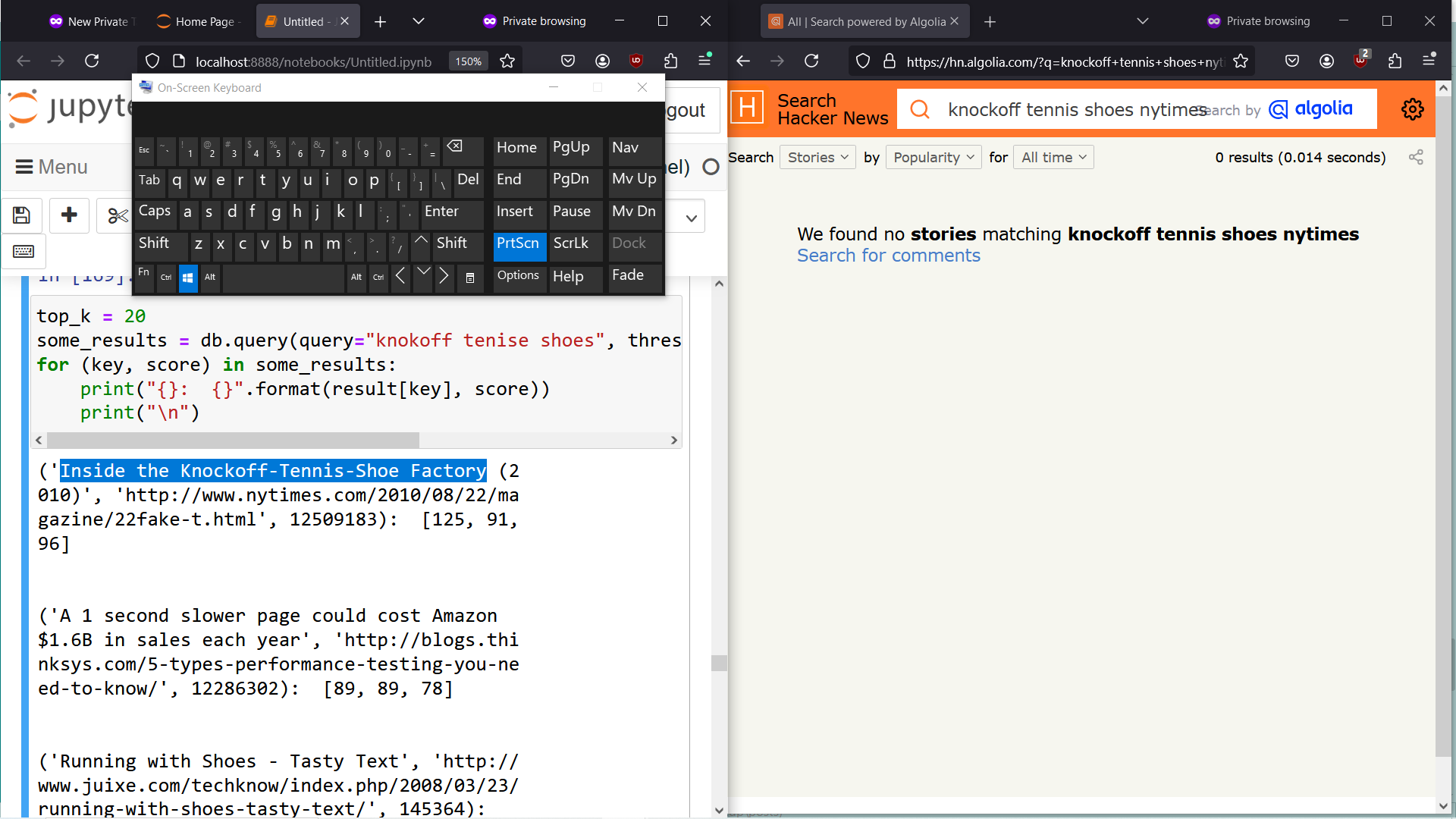

Real-World Performance and Comparison against Algolia:

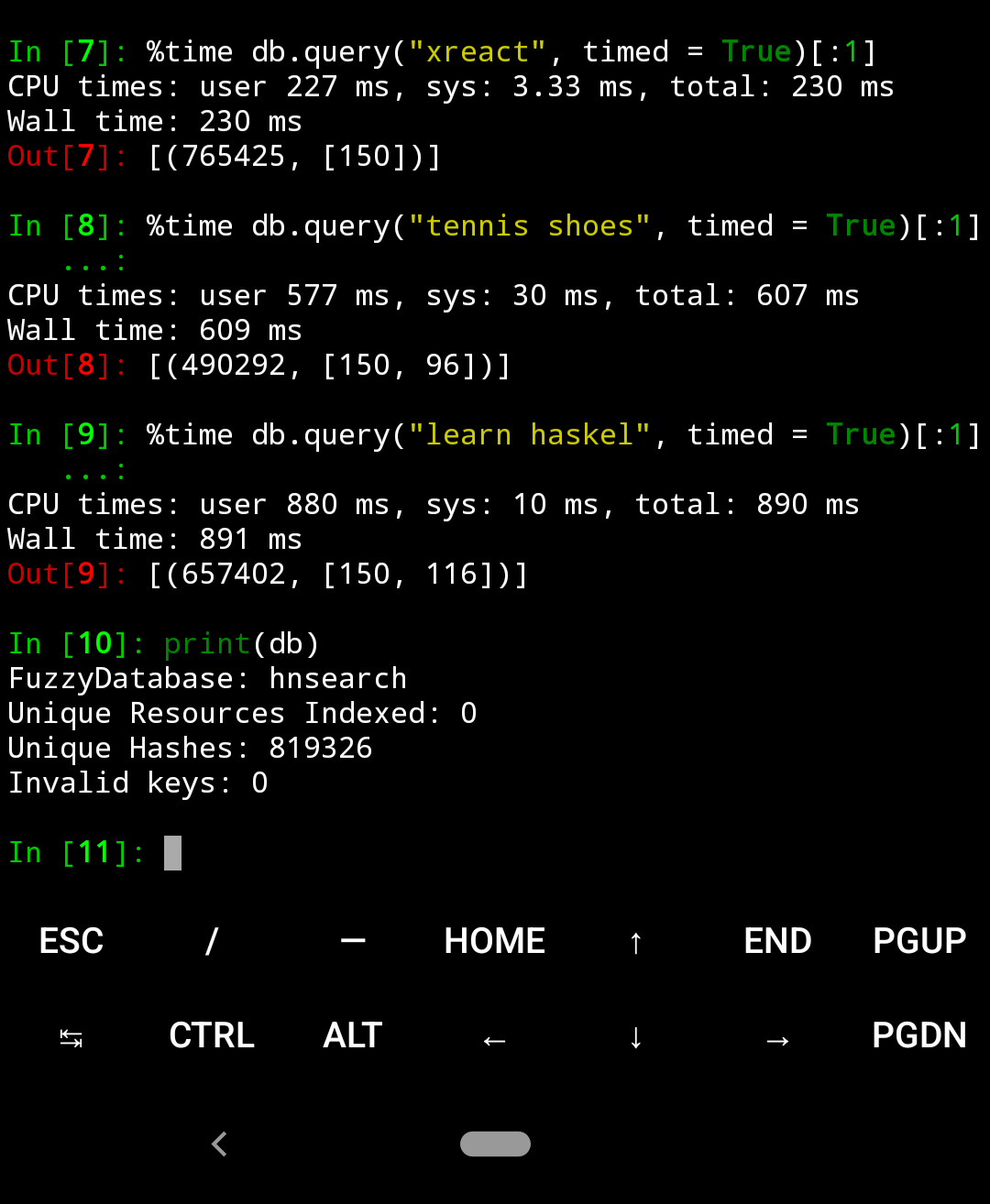

To be of practical use, preceding ideas and implementations must work on real-word search queries. For this i downloaded a Hacker News Dump from archive.org.

I indexed around 1 million stories by combining title and url of a submitted story. We don't need to do anything special to combine (string)content from different types of columns/lables, simple concatenation of data from different columns/labels is enough!

For example content = url + title + username + <any_other_string>, then db.index(content, key = <some_unique_key>).

It resulted in:

print(db)

FuzzyDatabase: hnsearch

Unique Resources Indexed: 969193

Unique Hashes: 819326

Invalid keys: 0

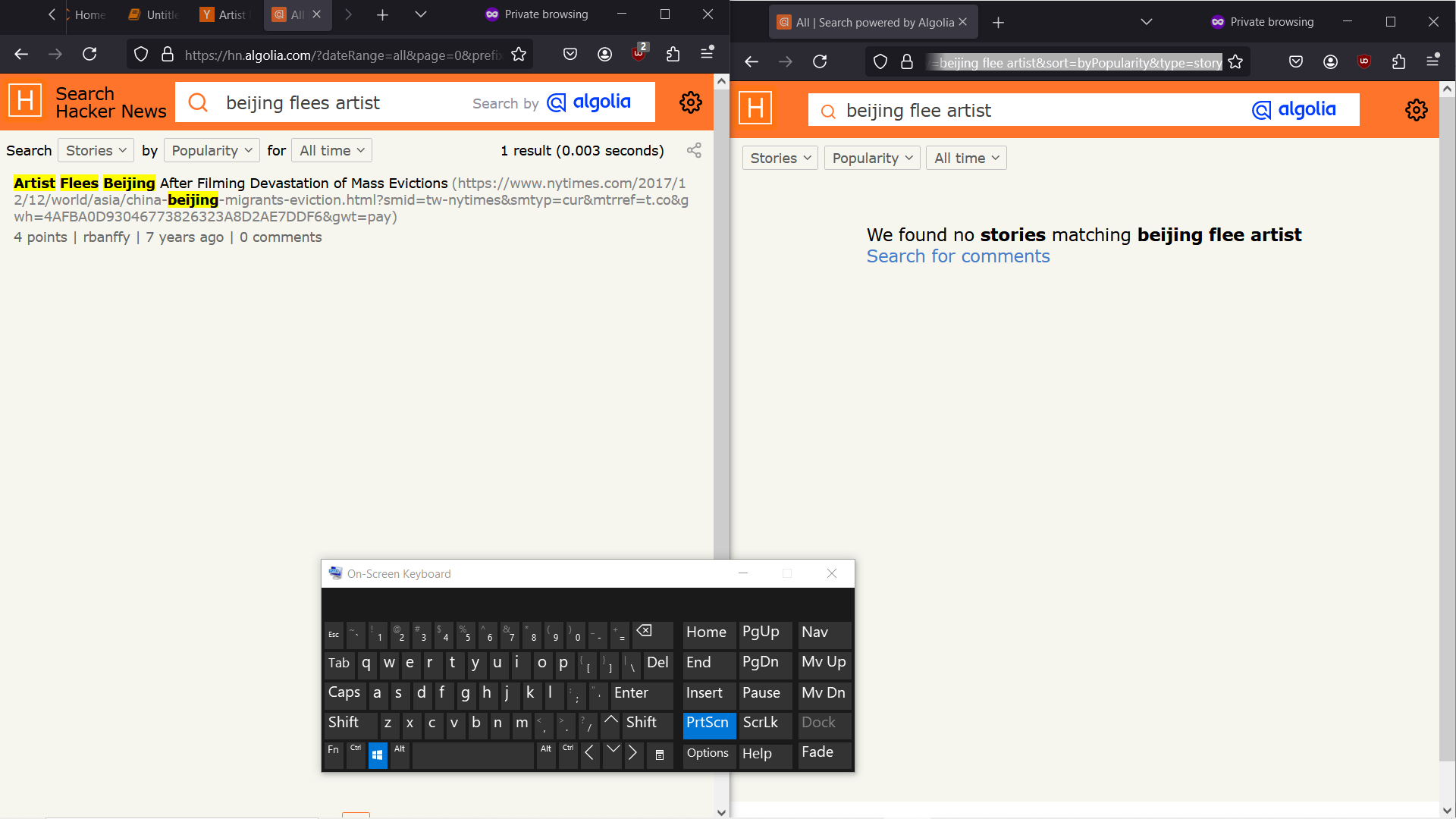

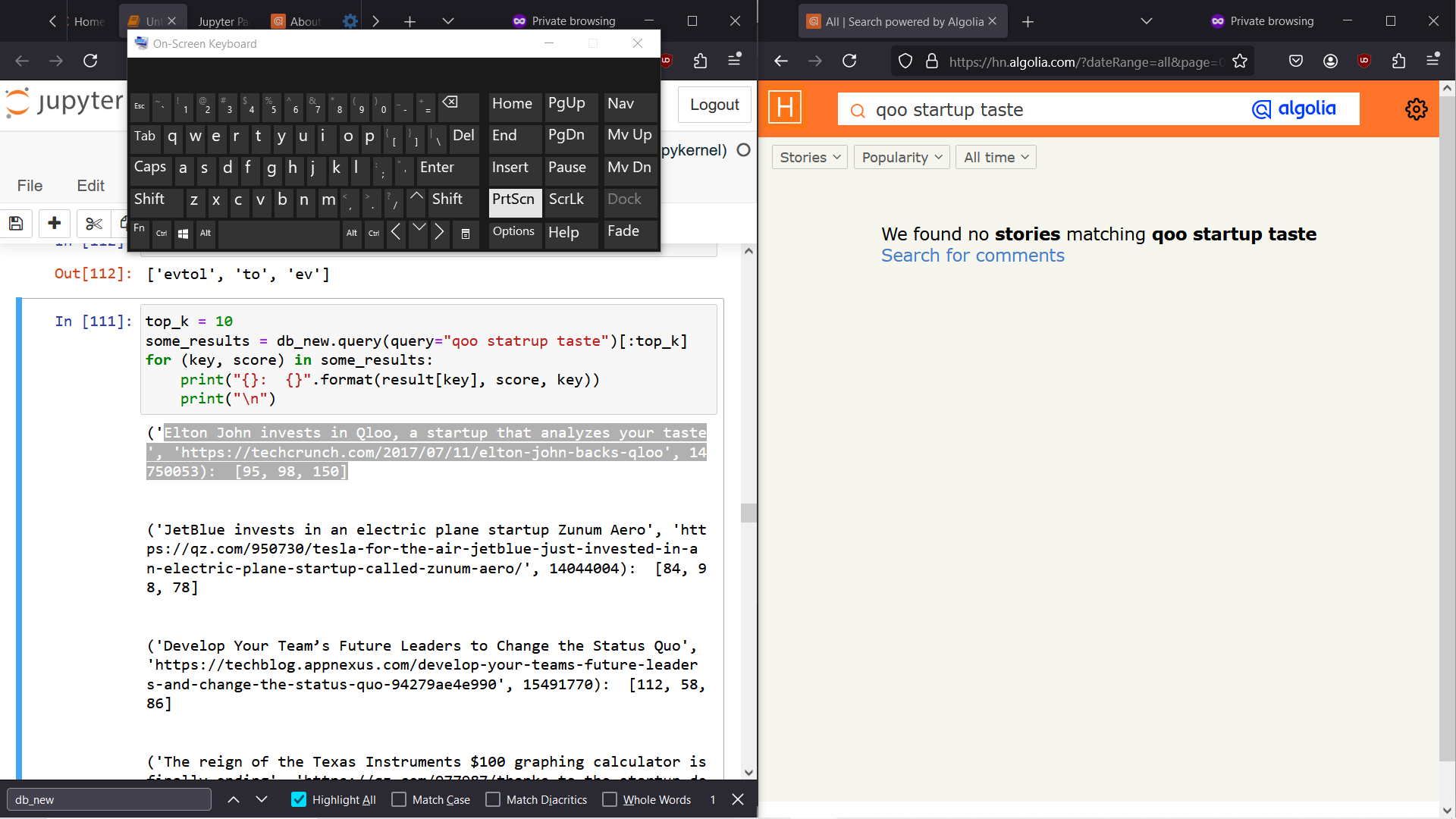

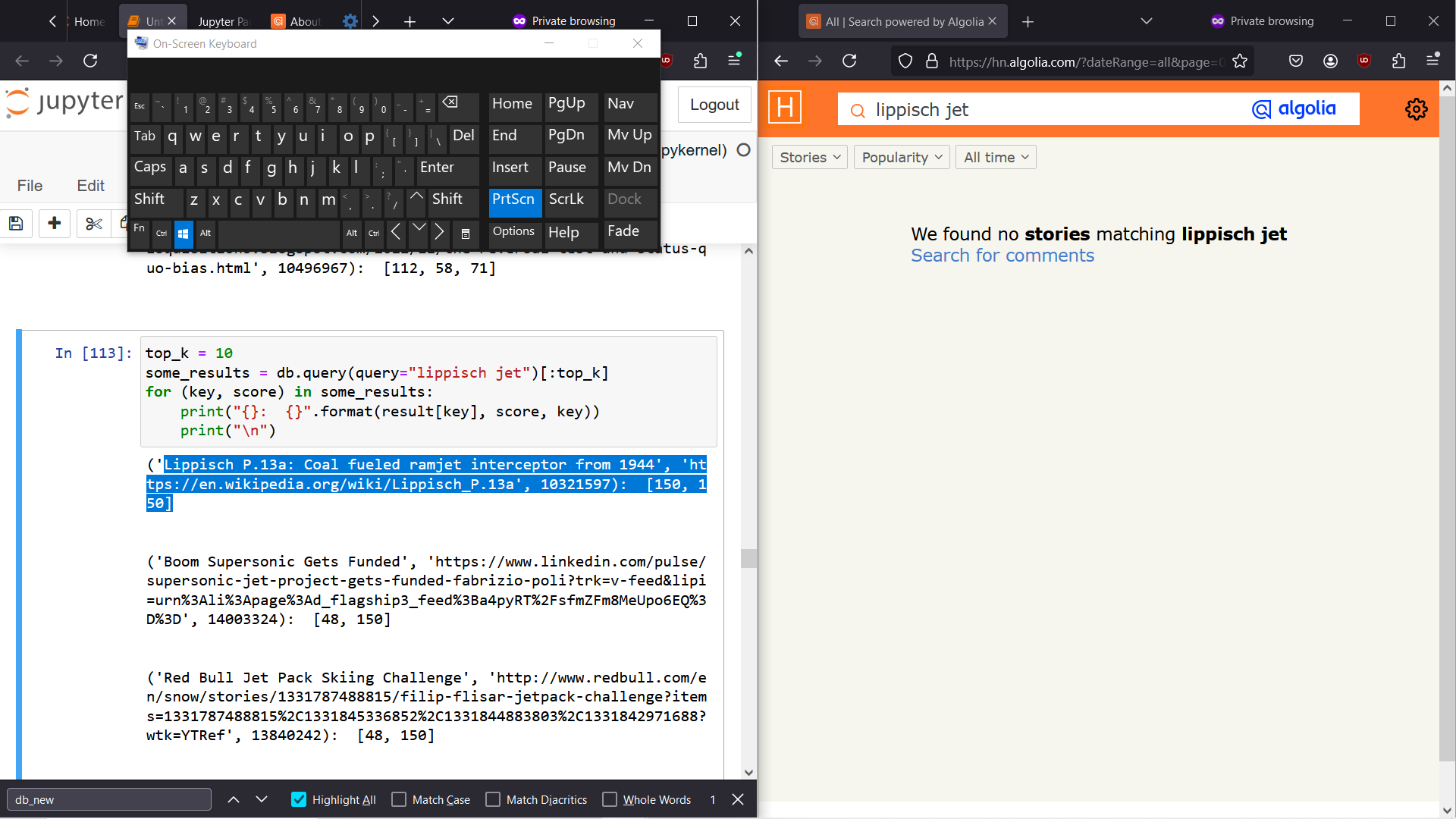

Algolia does a very decent job for providing (fuzzy) search for Hacker-news content in realtime, but falls short for cases with some random username, or non-trivial phrase. I suspect it is using a combination of bi-gram and tri-gram indices to handle typing mistakes upto a certain number of characters, hence sometimes an uncommon combination of characters may case it to return unexpected results.

It is also very annoying not to return any results at all for a user provided query and for some cases it just wouldn't return any results if we miss a single s, for example:

Since search is a highly personal and subjective matter it is very difficult to use a single metric to compare between any two approaches. But some of examples are shown below where our implementation seems cleary more robust to typing mistakes than Algolia. It is rational to expect/assume that only minor typos would be made by an user, but in reality users of different linguistic origins or geographies may end up using an interface, and hence initial assumptions made may not be valid anymore.

Latency ranges from 90ms(single token) to 800ms( 4-5 tokens), based on the count of tokens produced for a query. But it clearly has potential for real-time search, as (independent) latency for a single token remains around 90-250 ms. Note that whole implementation leverages only a single core of my quad-core CPU. Current query mechanism lends itself to map-reduce pattern, and hence can be easily parallelized , which i intend to work on in future.

RAM usage including the whole python Runtime is around 1.5Gb, this can be reduced significantly by offloading more code to a system language, as even None type takes 16 bytes for Cpython implementation. But i feel, even this RAM usage is reasonable, for a running a personal search infrastructure while allowing user to experiment easily by leveraging python dynamic nature and mature library ecosystem at the initial stages of development.

We can save the generated index by db.save("./hnmeta.json"). Resulting (Index).json file is around 195 Mb uncompressed and 90Mb compresssed. Note that this is just an auxiliary index, which doesn't store any string/original data and hence can be hosted on a completely different server. This upholds the incremental improvement principle, which can be crucial for large search infrastructures.

This index is portable by its very nature, and can be used to create a fresh FuzzyIndex by db_new = FuzzyIndex(file_path = "./hnmeta.json") on a new server. (as long as user can access original data referenced by key somehow)

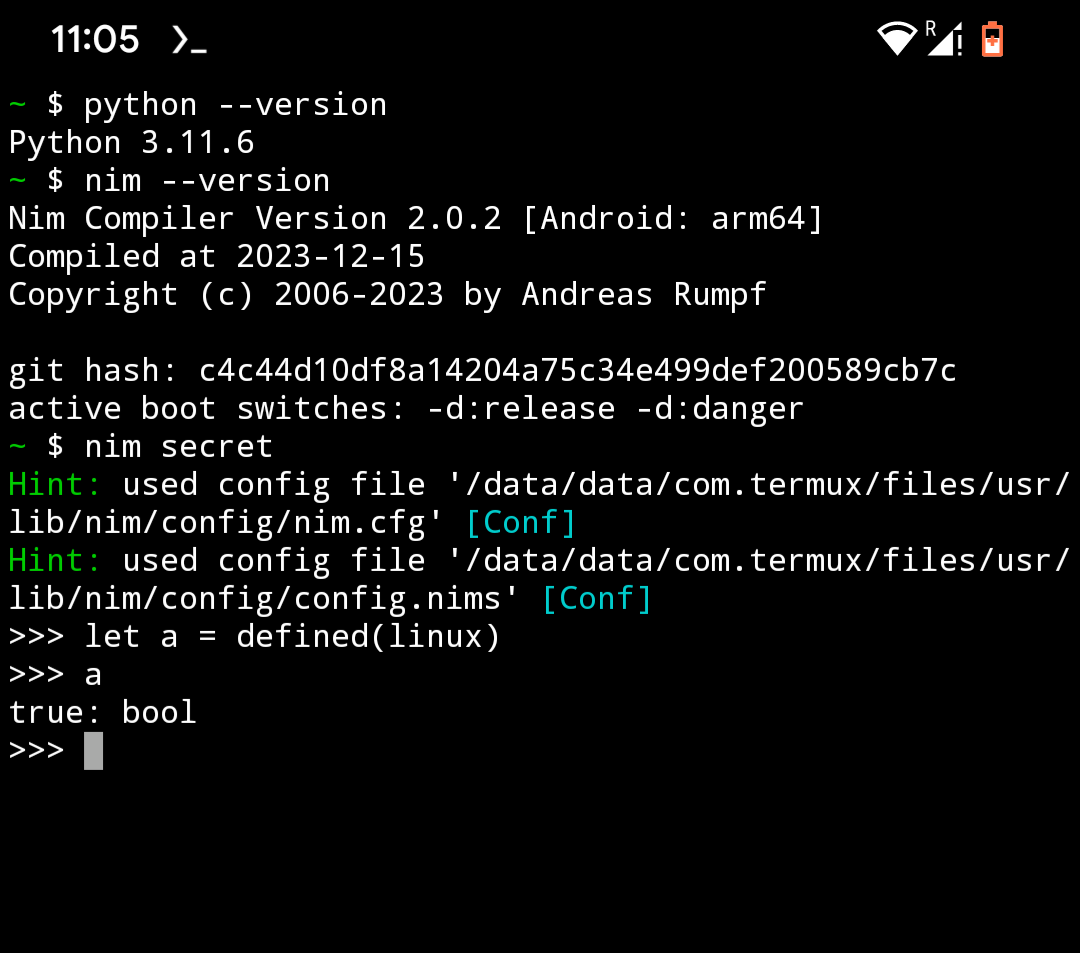

Running on a smartphone:

Since our implementation is self-contained, except python and nim well-tested standard libraries, it should work for each that platform where python and nim are supported. CPython itself is written in C, and hence it is very easy to compile python itself from even scratch for almost every platform. Nim leverages the llvm backend for C compilation to machine-code, like many other programming languages and hence can target a lot of target-triplets.

On android, termux ships both these packages and hence it is very easy to install both python3 and Nim.

I compiled the Nim code on my refurbished Pixel 3 to generate python extensions for aarch64 architecture.

Since we already generated an index which is just a .json file, we can now create a new in-memory index by parsing that file using db = FuzzyIndex(file_path = "hnmeta.json").

Below are some sample queries i ran. (this $100 smartphone has a lot more to offer as it has total 8 cores in bigLittle configuration!)

This allows us to treat any smartphone as potential node or server fully capable of running a search infrastructure. This could lead to linear scaling of infrastructure, adding a node in a system would be as simple as sending a signal to master device, which could then distribute some work to new node. As query would always be a few-bytes, extra overhead of sending "query" to few extra nodes, and "combining" the "results" would almost always amortize!

We can download or digitize any personal dataset and can serve it just using a smartphone or a single-board computer. Distributing an index is easy as sharing a file. For example we can index QA from stack-overflow for a particular programming language, by using original question id as key during indexing and add our own filters or any custom interface to personalize the search experience. This way we also shift a lot of "query" work on the client side and free-up the central server to serve content only!

Code:

Full Code is available at https://github.com/eagledot/malhar under Apache 2.0 License.

Remarks:

Final Implementation reflects a very minimalistic API, with good enough defaults with the idea of making it very easy to add a very capable fuzzy search interface for any random dump of text. Ideas from different subfields of computer-science are fused together to in pursuit of a robust search experience. The discussed approach has allowed us to implement "search" as a spectrum, where "keyword search" lies on one extreme end, rather than some entity to be developed independently.

Earlier i was using a more verbose implementation of this idea, but i keep realizing that reducing the friction to adopt or integrate a new library is very crucial. Search affects almost every single experience we have on the internet and even on our own OS (Windows shows how hostile can it be) . Even more than capable companies like Netflix, Amazon or any e-commerce company, have incentives to try to keep default search really biased (profitable as they believe) .

But smaller, niche business could really benefit by putting some extra effort in their search interfaces. Not being able to find a product just because of a minor spelling mistake or in-complete description shouldn't be the norm.

Any active information-retrieval system must be capable of returning top-k results along with estimated probability/scores (atleast for internal-purposes) rather than a binary yes-no result. It would allow someone to actually debug and improve the search experience even without understanding the underlying ranking system upto a point.

I am also grateful that datasets like "commoncrawl" and "wikipedia" exists supporting an infinite amount of support for all kinds of research, but worried at the same time about the consequences of destruction being caused by "Generative AI". Amount of false/unverified information being generated has crossed the limits of "sustainable rectification".

Some ideas still remain unfleshed in my mind and could be used to make search experience more robust by handling an even larger amount of search diversity. I hope to work on them in future, if more users found this project as useful as i have found it so far !